13 February 2024

We're often asked questions about the seemingly odd behaviour of some models:

- Why does my model find different solutions each time I run it?

- The solver says the different solutions are all optimal. But some solutions are better than others. How can that be true?

- What can I do to make my model find the overall best solution?

In most cases, the answers are:

- The model is non-linear with multiple local optima. The solver may use a process that includes random elements that lead it to different neighbourhoods of the feasible region.

- Each solution is locally optimal, meaning that it is the best solution in that neighbourhood of the feasible region.

- To find the overall best solution, either use a solver than finds globally optimal solutions for that type of model, or solve the model multiple times, with different initial conditions, to make it more likely that the solver finds the best neighbourhood.

These answers generally require explanation, So, in this article, we explore some aspects of solver behaviour when solving non-linear optimization models. Our goal is to provide insights into what the solvers are doing, why they may find different solutions, and how we can improve our chances of finding at least a good, and hopefully a globally optimal, solution.

Download the models

The models described in this series of articles are built in Python using the Pyomo library.

The files are available on GitHub.

Situation

Just one variable

We want to examine some aspects of how solvers behave when solving non-linear optimization models. These aspects commonly cause confusion and frustration.

Most models have many variables, which makes it difficult to understand what's happening. To make the situation easy to illustrate, we focus on a simple formulation that has only one variable with simple bounds: \(0 \le x \le n\), where \(x\) is continuous. There are no other constraints. The code for our variable is shown in Figure 1.

# Set bounds on x

Model.xMin = 0

Model.xMax = 8

Model.x = pyo.Var(domain = pyo.Reals, bounds = (Model.xMin, Model.xMax), initialize = 0)Choice of three objective functions

To illustrate different behaviours, we provide a choice of three objective functions:

- Function 1, linear: \(z = a \times x + c\). This convex objective function has a single, global optima. Linear models are generally the easiest to solve.

- Function 2, simple non-linear: \(z = h_1 \times e^{-((x - p_1)^2)}\). This non-convex function has a single, global optima.

- Function 3, complex non-linear: \(z = \sum_{i=1}^{t} h_i \times e^{-((x - p_i)^2)}\). This more complex non-convex function has multiple optima.

We focus on maximizing these objective functions, though the same types of behaviours also apply to minimizing.

For the non-linear objective functions, note that we include extra parentheses to indicate that the negative sign is applied after the square is calculated.

The objective function is chosen in the main notebook for each model, like Function = 2. The code for the objective function implementations is shown in Figure 2.

# Define objective function choices

if Function == 1:

z = 1.5 * Model.x + 1 # Linear

elif Function == 2:

z = 1 * pyo.exp(-((Model.x - 2)**2)) # Non-linear bump

else:

Height = [-2.500, 2.000, 1.750, -1.000] # Negative height indicates a dip

Position = [ 1.000, 2.750, 5.000, 7.000]

z = 0

for t in range(0, len(Position)):

z += Height[t] * pyo.exp(-((Model.x - Position[t])**2)) # Non-linear bumps and dips

Model.Obj = pyo.Objective(expr = z, sense = pyo.maximize)Our non-linear objective functions have the form \(e^{-x}\). Note that we cannot use the standard Python exp function, as the variable values are not defined when this code is evaluated. Instead, we must use the Pyomo exp function. The same applies for other mathematical functions like sin and cos used in model formulations.

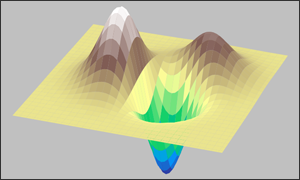

As an aside, our non-linear objective functions are differentiable. Therefore, we don't need a solver to find the optima, as we can solve these problems using calculus. But that isn't usually the situation. We can also extend the function to three dimensions, like the image at the top of this article. The code to produce that image is available on GitHub.

Choice of solvers

To see how different solvers behave, we run our models with a choice of three solvers, each of which is installed locally:

- Bonmin (Basic Open-source Nonlinear Mixed INteger programming). Bonmin is open source code for finding locally optimal solutions for general Mixed Integer Non-Linear Programming (MINLP) problems.

- Couenne (Convex Over and Under ENvelopes for Nonlinear Estimation). Couenne is open source and uses a branch & bound algorithm for solving Mixed-Integer Non-linear Programming (MINLP) problems. It aims to find global optima of non-convex MINLP problems.

- Ipopt (Interior Point Optimizer, pronounced "Eye-Pea-Opt"). Ipopt is an open source software package for large-scale continuous non-linear optimization. It implements an interior point line search filter method that aims to find a local solution.

Both Bonmin and Couenne use Ipopt as part of their toolbox for solving models.

The choice of solver is specified in the main notebook for each model, like Model.Engine = 'ipopt'.

Models

We have four models, to show various aspects of solver behaviour. Each model can use any of the objective function options. Model 2 can use only the Ipopt solver, while the other models can use any of the listed solvers. That is:

- Model 1: Standard. Straightforward design and typical implementation of the situation.

- Model 2: Iterations. Like Model 1, except that we explicitly control the iterations of the Ipopt solver to show how it steps towards a solution.

- Model 3: Search. We iterate over a range of initial values for the variable, in a type of grid search, in the hope of finding a better solution.

- Model 4: Multistart. Uses Pyomo's

multistartfeature to search for a better solution.

All the models share the imports.ipynb and objective-functions.ipynb modules. The models differ only in their main file and their interaction with the solvers.

Exploration of solver behaviours

Different solvers and different initial conditions may matter, a lot

Model 1 is a standard, straightforward implementation. We specify the variable, objective function, and solver we want. Then we set up the chosen solver, call the solver, and print the result. In some circumstances, the solver's starting point matters. Therefore, we have a parameter for specifying the initial value of the \(x\) variable. We start with Initialx = 0.

Model 1's solutions, found by each solver for each objective function, are shown in Figure 3. That is:

- For objective function 1, each of the solvers finds a solution where \(x = 8.00\) and the objective function value is \(z = 13.00\). This is expected, as our linear objective function is \(1.5 \times x + 1\), with bounds of \(0 \le x \le 8\). The maximum of 13 occurs at the upper bound of 8.

- For objective function 2, Couenne and Ipopt find the same solution. Bonmin finds a different solution, with an objective function value of zero, which is clearly not as good as the value found by the other two solvers.

- For objective function 3, Bonmin and Ipopt return the initial \(x\) value of zero, which isn't helpful. Couenne finds a better solution.

An issue here is that all the solutions in Figure 3 are reported as "optimal" yet, for the non-linear functions, some solutions are obviously better than others.

Let's try different initial values for \(x\). Figure 4 shows the results for an initial value of 4, which is in the middle of the bounds for \(x\). This time, the solvers all find the same solutions for objective functions 1 and 2. For objective function 3, Bonmin and Ipopt find the same good solution, though Couenne finds a slightly better solution.

Figure 5 shows the results for an initial value of 8, which is at the upper end of the allowed range for \(x\). Ipopt's solution for objective function 2 is worse than previously. For objective function 3, Bonmin and Ipopt both return the initial \(x\) value of 8 and objective function values that are worse than the previous attempts. Couenne returns the same solution for all initial \(x\) values.

We know that the solution for the linear objective function 1 is globally optimal. But some of the solutions for objective functions 2 and 3 are clearly better than others. Given the results so far, we don't know if any of the solutions for objective functions 2 and 3 are globally optimal.

This is where things often get frustrating for the modeller, as the solvers identify all the solutions as optimal. But these "optimal" solutions vary, depending on our choice of solver and the initial value we assign the variable. The linear model is OK, but it is hard to have confidence in the solutions for the non-linear models.

Examining the solver iterations can show what's happening

To gain some insight into what's going on with the non-linear models, Model 2 allows us to examine each step that the solver takes towards finding a solution. Note that Model 2 only works with the Ipopt solver.

That is, the code creates an options file to limit the number of iterations that Ipopt is allowed to do, using the max_iter option. It starts with zero iterations and increments by 1 until the solver either reaches the specified maximum number of iterations or it finds an optimal solution.

For objective function 2, with an initial \(x\) value of zero, the result is shown in Figure 6. Ipopt finds an optimal solution after 10 iterations. For less than 10 iterations, Model 2 stops before finding an optimal solution.

Iterations x Objective Condition

-------------------------------------------------

0 0.0100 0.0191 maxIterations

1 0.1108 0.0282 maxIterations

2 0.4863 0.1011 maxIterations

3 0.6399 0.1572 maxIterations

4 2.4615 0.8082 maxIterations

5 1.6658 0.8943 maxIterations

6 2.1004 0.9900 maxIterations

7 1.9986 1.0000 maxIterations

8 2.0000 1.0000 maxIterations

9 2.0000 1.0000 maxIterations

10 2.0000 1.0000 optimal

In Figure 7 we plot the iterations on a chart that also shows the whole objective function. It is important to note that the solver cannot see the whole objective function – it sees the objective function values only at the points where it evaluates the function.

From an initial position of \(x = 0\), we see that Ipopt takes some small steps, climbing up the objective function. Then it takes a larger step, to \(x = 2.4615\). It evaluates the objective function at that point, observing that the slope has changed sign. Therefore, Ipopt reverses direction, stepping back somewhat. The slope changes again, so Ipopt also changes direction. This process repeats a few more times, until the solver converges to the point \(x = 2.000, z = 1.000\), where the slope of the objective function is zero. At that point, the solver has found a local maximum, so it stops. For this objective function, the solution is also a global maximum.

The zig-zag pattern seen in Figure 7 is very common. The use of larger steps is also a key part of how a solver can find solutions quickly – if the solver always takes small steps, then it would often need many steps to converge to a solution, which may be a very slow process. Taking small steps also means that the solver could not escape from the starting neighbourhood, which is important for more complex situations like objective function 3.

Ipopt follows a similar pattern when the initial \(x\) is set to 4, climbing up the objective function then zig-zagging to the optimum.

But what happens when the initial \(x\) is set to 8? Ipopt's iterations are shown in Figure 8.

Iterations x Objective Condition

-------------------------------------------------

0 7.9200 0.0000 maxIterations

1 7.8220 0.0000 maxIterations

2 7.7139 0.0000 maxIterations

3 7.5164 0.0000 maxIterations

4 7.4733 0.0000 maxIterations

5 7.4685 0.0000 optimal

The solver makes little progress, stopping after a few iterations, a long way from the optimal \(x\) of 2.000.

So why did the solver stop there? The objective function values provide a clue: they all appear to be zero, to 4 decimal places. But they're not quite zero. For example, at \(x = 7.4685\), \(z = 1.03 \times 10^{-13}\). Near the bound \(x = 8\), the objective function is very close to flat and almost zero, so the solver doesn't gain much traction. Since the slope is indistinguishable from zero (within reasonable numerical precision tolerance), the solver reports the last solution as optimal.

What the solver doesn't know can hurt it

But what about objective function 3? Figure 9 shows the path taken by Ipopt in finding a solution to objective function 3, given an initial \(x\) value of 4. We can see that the local minimum in that neighbourhood occurs at \(x = 3.9235\), just to the left of the initial point. But the solver doesn't know that. It sees that the objective function slopes upwards to the right. So, since we're maximizing, the solver heads up the nearest "hill" to the right, taking it to the local optima indicated in the figure. There is no zig-zagging in this instance.

The problem is that the optima in the other direction is better. But Ipopt didn't look in that direction. Couenne, conversely, applies some additional processes to explore the solution space, so it finds the global optima for this model irrespective of the initial \(x\) value.

Figure 9 also provides hints about why Ipopt failed to find useful solutions when given initial \(x\) values of 0 and 8. In both cases, the solver takes tentative steps away from the initial value, but finds that the objective function value is getting worse. So, it turns around and heads uphill – returning to \(x = 0\) when starting at that end, or returning to \(x = 8\) when starting at the other end.

Remember that the only knowledge the solver has about the shape of a non-linear objective function is at the points it evaluates. Therefore, the solver doesn't see that there are better solutions if only it went a bit further towards the middle of the allowed range.

A grid search might help

We've observed that different initial variable values can lead to better solutions. So, one obvious way to improve our chances of finding globally optimal solutions is to systematically search the solution space.

That's exactly what Model 3 does. Specifically, Model 3 iterates over a set of initial \(x\) values such as \(x = 0, 1, ..., 7, 8\).

The result for objective function 3, using the Ipopt solver, is shown in Figure 10. While iterating through the initial values, the program notes the best solution found and prints that result at the end.

Initialx x Objective Condition ---------------------------------------------- 0.0000 0.0000 -0.7347 optimal 1.0000 2.8317 1.9328 optimal 2.0000 2.8317 1.9328 optimal 3.0000 2.8317 1.9328 optimal 4.0000 4.9627 1.7468 optimal 5.0000 4.9627 1.7468 optimal 6.0000 2.8317 1.9328 optimal 7.0000 2.8317 1.9328 optimal 8.0000 8.0000 -0.3677 optimal Best objective = 1.9328 at x = 2.8317

In this example, five of our nine initial values returned the globally optimal solution. But there is no guarantee that any of the initial values will find the globally optimal solution. The more initial values we try, the better the odds of finding a global optima, or at least good solution. But the program will take longer to run, so there's a limit to how extensive our search can be – especially for models that take a long time to solve.

Note that initial values of 6 and 7 return the global maximum solution by jumping past the local optima at around \(x = 5\). That's fortunate, but the solver may also jump over the global maximum to find a local maximum.

A variation of this search technique is to re-run the model with random initial variables values. This can be especially useful when the model has many variables with too many combinations of values to use a systematic grid search.

Pyomo's multistart feature is useful

We often use the search technique, described above, when a solver has difficulty finding a good solution or when we suspect that a better solution might exist. Helpfully, Pyomo includes a feature that automatically applies a similar technique: multistart.

Pyomo's multistart feature, as the name suggests, restarts a model a specified number of times, using different initial values for the variables each time. The exact details of how it chooses the initial values are buried deep in the source code, though there is some documentation.

Setting up multistart is straightforward, with our Model 4 implementation shown in Figure 11. We just need to specify that we want to use multistart, then provide options that include the solver to use (slightly different to how use usually specify the solver), the number of multistart repeats to perform (we just specify a constant Model.Repeats), and any other appropriate options.

# Create the Solver object for a local solver

def SetUpSolver(Model):

Solver = pyo.SolverFactory('multistart')

return Solver, Model

# Call a local solver using multi-start

def CallSolver(Solver, Model):

Results = Solver.solve(Model, solver = Model.Engine, suppress_unbounded_warning = True, iterations = Model.Repeats)

return Results, ModelFor objective function 3 and Ipopt, the result is shown in Figure 12. Even though we specified an initial \(x\) of zero, Ipopt finds the global optima (unlike the previous models). By varying the Model.Repeats value, we discover that Ipopt needs four restarts to find the global maximum in this case. Bonmin needs only one restart to find the global maximum. In general, it is difficult to know how many restarts is enough – you'll need to experiment to determine an appropriate number for a specific model.

Multistart also works with Couenne, but it makes no difference in this situation as Couenne already finds the global maximum. For other situations, multistart might be useful in association with the Couenne solver.

Initial x Objective Condition

----------------------------------------------

0 2.8317 1.9328 optimal

Solutions can be very sensitive to initial conditions

Not only can different initial values lead to different solutions, for many models a small difference in the initial values can lead to entirely different solutions.

For example, using Model 2, we can see the iterations for Ipopt solving objective function 3 with initial values of 7.0 and 7.1, as shown in Figure 13.

Our initial points are slightly either size of the local minimum in that neighbourhood, which is at \(x = 7.0532\). But these very similar initial conditions lead to very different solutions. That is:

- With \(Initialx = 7.0\), the solver heads up the nearest hill to the left, jumps past the local maximum, finding a global maximum of \(z = 1.9328\) at \(x = 2.8317\).

- With \(Initialx = 7.1\), the solver takes a completely different path, heading up the nearest hill to the right, finding a maximum of \(z = -0.3677\) at \(x = 8.0000\).

Unless the solver looks in both directions from the starting point (or any other intermediate point), it will not know that there may be a better solution in the other direction.

Summary of key points

Solving non-linear models is hard. That's especially true as the number of variables increases, we include integer/binary variables, and if the model is non-convex.

In general, a solver might find a local optima rather than a global optima. We may not know if a given solution is a local or global optima.

Ipopt and Bonmin are local non-linear solvers, so they will stop when they find a local optima in the specified maximizing or minimizing direction.

Couenne is a global non-linear solver, so in theory – provided specific conditions are met – it should find a global optima. In practice, it struggles with some models, especially when the model is large and/or has integer/binary variables. Couenne applies a heuristic approach when the model is non-convex, so global optimality is not guaranteed in that situation. Some other global solvers, like the commercial Octeract or BARON solvers, may perform better.

Starting with different initial values for the variables may lead to different solutions. The solution that a solver finds might not be close to the start point. Conversely, starting close to the global optimum does not necessarily ensure that the solver finds that solution. The solution may also be a long way from optimal. Even worse, small differences in the initial variable values can lead to entirely different solutions.

Implementing a grid search of initial variable values, like we have in Model 3, can increase our odds of finding a good, or perhaps globally optimal, solution. But there is a trade-off, as we need to run the model potentially many times, which can be time-consuming. The grid search may be systematic or random, depending on the size of the solution space.

Pyomo's multistart feature, as used in Model 4, is a quick and convenient way to automatically broaden the search for a better solution. Multistart also has a run-time trade-off, as many initial values may be needed to find a good solution. Even then, there's no guarantee that the solver will find a good solution.

Conclusion

We've explored some aspects of how non-linear models behave, what selected solvers are doing while solving non-linear models, and how we can improve the solutions found.

Understanding of the challenges that non-linear solvers face may reduce modellers' confusion and frustration. We hope that we've helped, just a bit.

If you would like to know more about this model, or you want help with your own models, then please contact us.