18 April 2024

When doing analysis with an optimization model, we commonly need to run many cases: for example, to explore a range of input value scenarios or to evaluate a set of alternative designs.

In this article, we explore running optimization model cases in parallel. Specifically, we use the Python multiprocessing and mpi4py libraries to fully use the many CPU cores/threads in modern computers.

Our goals are to:

- Illustrate how to apply the multiprocessing and mpi4py libraries to running optimization model cases in parallel.

- Measure the performance of running cases in parallel compared with serially.

- Compare the performance of an old 4 core / 4 thread CPU with a new 20 core / 28 thread CPU, using the HiGHS solver.

As an example, we convert the serial model from a previous article, Warehouse space for free: Exogenous enumeration, to run multiple cases in parallel.

Download the models

The models described in this article are built in Python using the Pyomo library.

The files are available on GitHub.

Situation

The situation is described in the article Warehouse space for free: Non-linear model.

Given our goals, the situation being modelled isn't especially important. We use the warehouse rack and shelf design situation as an example because, to solve that model at the required scale, we made some variables exogenous to the model and then enumerated all combinations of those variables. That enumeration produced 158 cases, which we ran serially on a single PC, as described in Part 3 of the article series Warehouse space for free: Exogenous enumeration.

Since then, we've purchased a new PC, with more CPU cores/threads. This new PC provides an opportunity to test running cases in parallel, to see how much it can improve performance when running a model 158 times.

A very brief introduction to parallel computing

Multiple CPU cores/threads

Modern computers typically have many processing units, known as "cores", in their Central Processing Unit (CPU). These multiple cores enable the computer to run multiple tasks in parallel. Those tasks may be independent (such as separate applications) or a set of related tasks (such as processes of a single application). In greatly simplified terms, each task is known as a thread. Some CPU cores can run multiple (usually two) threads in parallel.

Many applications use multi-threading, at least to some extent – that is, running tasks in parallel, as different process threads. For example, the Gurobi solver sometimes uses multiple threads when solving an optimization model. Similarly, the OR-Tools CP-SAT solver operates in parallel by default. Conversely, the CBC and HiGHS solvers are mostly single-threaded (though HiGHS does some multi-threading, and there are plans for a fully multi-threaded version of HiGHS in 2024).

In general, managing parallel tasks is a tricky programming challenge. Fortunately, there exist libraries to help us.

Multiprocessing and mpi4py

There are several libraries for running Python code in parallel. We focus on two of the libraries:

- Multiprocessing runs multiple copies of a function, each with its own memory space. Each process is self-contained. Multiprocessing is generally used when we have independent tasks that we want to run in parallel.

- mpi4py uses the Message Passing Interface (MPI) standard for running entire programs in parallel. It uses an external environment to enable the passing of messages between the tasks. Coordination of interacting tasks is much more complex than running independent tasks. The Pyomo documentation recommends using mpi4py for running multiple model instances in parallel.

We want to run independent cases, with no interaction between the cases. This is simpler using the multiprocessing library, though mpi4py can also be used in that manner.

Model design and implementation

Simplify the model

We take Model 3 from a previous article and simplify it as follows:

- Convert the model to a Python text file, rather than a Jupyter notebook. This isn't necessary for using the multiprocessing library, but it is necessary for using MPI.

- Remove some features that aren't needed for our purpose here, including: writing the model to a file, option to call NEOS Server, alternative solvers, and some of the detailed output.

Three versions of Model 4

We then make three versions of our model:

- Serial. After making the changes described above, this is essentially a simpler version of the model used in our previous article. It runs the 158 cases serially.

- Multi. We modify the Serial model version to use the multiprocessing library. It runs the 158 cases in parallel, using up to the number of processes that the user specifies.

- MPI. We modify the Serial model version to use the mpi4py library. It also runs the 158 cases in parallel, using up to the number of processes that the user specifies.

Comparing the models

The models are substantially similar. All of the models have around 150 lines of code, including comments and blank lines. Importantly, the optimization modelling is identical – the code differences mostly relate to running the cases and writing output, so that's where we focus.

Serial model

In the Serial model, we have a simple loop that runs each case in sequence. The loop code is shown in Figure 1, where numRows is the number of cases (158, in this example). The tasks function creates a Pyomo model with the data for the current case, then calls the HiGHS solver to find an optimal solution for that case. Each case's solution is written after it is solved. We also note the best objective function value and best solution, which are printed after the loop has finished.

    for case in range(0, numRows):   # Run each of the tasks
        Obj, Solution = tasks(case)
        Summary += Solution + '\n'
        currObj = round(Obj, 4)
        if currObj < BestObj:
            BestObj = currObj
            BestCase = Solution

We run our Serial model from PowerShell, using python model-4-serial.py
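The loop in Figure 1 relies on variables that are initialised elsewhere in the model file. As a minimal, self-contained sketch of the same pattern, the code below uses a hypothetical stand-in for the tasks function (the real one builds a Pyomo model and calls HiGHS), and assumes a minimization objective, as implied by the `<` comparison. The `run_serial` wrapper and the made-up objective values are our illustration, not part of the article's model.

```python
import math


def tasks(case):
    """Hypothetical stand-in for the article's tasks function.

    The real function builds a Pyomo model for the case and solves it with HiGHS.
    Here we just return a made-up objective value and a solution string.
    """
    obj = (case - 3) ** 2 + 1.23456
    solution = f'Case {case}: objective {obj:.4f}'
    return obj, solution


def run_serial(numRows):
    """Run every case in sequence, tracking the best (lowest) objective."""
    BestObj = math.inf   # Assumes minimization, so any real objective beats this
    BestCase = ''
    Summary = ''
    for case in range(0, numRows):
        Obj, Solution = tasks(case)
        Summary += Solution + '\n'
        currObj = round(Obj, 4)
        if currObj < BestObj:
            BestObj = currObj
            BestCase = Solution
    return BestObj, BestCase, Summary


if __name__ == '__main__':
    best_obj, best_case, summary = run_serial(numRows=8)
    print(summary)
    print(f'Best: {best_case}')
```

Wrapping the loop in a function is only a convenience for the sketch. It makes the serial version easy to time and to compare with the parallel versions.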

Multiprocessing model

The multiprocessing library is part of Python's standard library, so no separate installation is needed. We just import it, for example using import multiprocessing as mp.

The Multi model does essentially the same thing as the Serial model, but the mechanism differs. Instead of looping over a function call, in the file's main function we define what the multiprocessing library calls a Pool of processes. The pool creates a user-specified number of processes that it uses to run tasks in parallel.

To use the pool, we map it to a function and pass an iterable parameter to that function. As shown in Figure 2, we do this twice:

- First call: we map the pool to the setup function and supply a single parameter. This does some one-off setup tasks, specifically to print the number of processes we're going to use. The iterable [processesToUse] has one element, so the setup function is called exactly once, by one of the pool's processes.

- Second call: we map the pool to the tasks function and supply an iterable of the form [0, 1, 2, ..., 156, 157]. Because the iterable has 158 elements, the tasks function is called 158 times. The tasks function is very similar to the function we used in the Serial version. It uses the current value of the iterable to extract the data for a case, creates a Pyomo model, then calls the HiGHS solver to find an optimal solution for that case.

Note that there is no explicit looping in our Multi model's code – that is handled by the multiprocessing library.

    print(f'Number of cases: {numRows}')
    cases = range(0, numRows)   # Define each rack design as a case
    pool = mp.Pool(processes = processesToUse)   # Create a pool with the user-input number of processes
    pool.map(func = setup, iterable = [processesToUse])   # Do one-off setup
    result = pool.map(func = tasks, iterable = cases)   # Run each of the tasks. Returned structure is in case order, irrespective of completion order
    summaryOutput(result)

We run our Multi model from PowerShell, using python model-4-multi.py

The Multi model's output looks like Figure 3. The first two lines are printed by the setup function. When the tasks are running, the tasks function outputs the start and end time of each task.

In this example, we have allowed up to 4 processes to use the available 28 cores/threads. The multiprocessing library decides the order in which to run the tasks. Our cases take different lengths of time to solve, so the tasks can complete in any order. When a task completes, a new task is automatically started immediately.

Number of cases: 158

Running 4 processes on 28 cores/threads

Start task 1 at 11:56:27

Start task 11 at 11:56:27

Start task 21 at 11:56:27

Start task 31 at 11:56:27

Complete task 21 at 11:57:01

Start task 22 at 11:57:01

Complete task 31 at 11:57:08

Start task 32 at 11:57:08

Complete task 11 at 11:57:15

Start task 12 at 11:57:15

Complete task 1 at 11:57:18

Start task 2 at 11:57:18

Complete task 32 at 11:57:52

Start task 33 at 11:57:52

Complete task 22 at 11:58:00

...

When all 158 tasks have completed, we print each of the solutions along with the best solution found (like we do for the Serial version).

Importantly, even though the tasks can finish in a jumbled order, the multiprocessing library keeps track of the order in which we defined the tasks. The result data structure returned by our second call of the pool's map is in the defined task order, which makes output easier. This is a nice feature of the multiprocessing library.
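To make the pool pattern concrete, here is a minimal, self-contained sketch. The tasks and summaryOutput functions are hypothetical stand-ins with made-up objective values, not the article's code. The sketch also shows two details worth knowing: the `if __name__ == '__main__':` guard, which is needed on Windows because each child process re-imports the file, and the `with` block, which cleans up the pool when we're done.

```python
import multiprocessing as mp
import time


def tasks(case):
    """Hypothetical stand-in for solving one case; returns (objective, solution text)."""
    time.sleep(0.01 * (5 - case % 5))   # Uneven durations, so completion order is jumbled
    obj = (case - 3) ** 2 + 1.0
    return obj, f'Case {case}: objective {obj:.4f}'


def summaryOutput(result):
    """Return the best (lowest-objective) result and the per-case summary text."""
    summary = '\n'.join(solution for _, solution in result)
    best = min(result, key=lambda r: r[0])
    return best, summary


if __name__ == '__main__':   # Guard the entry point, so child processes don't re-run it
    numRows = 12
    with mp.Pool(processes=4) as pool:             # The with block cleans up the pool on exit
        result = pool.map(tasks, range(numRows))   # Results come back in case order
    best, summary = summaryOutput(result)
    print(summary)
    print(f'Best: {best[1]}')
```

Because map returns results in the order of the input iterable, the summary lists the cases in order from 0 to 11, even though the sleep times make the tasks finish out of order.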

MPI model

Setting up the MPI model requires two steps:

- Install an MPI environment. We're using Windows 11, so we installed the Microsoft MPI (MS-MPI). Specifically, we installed the executable version, msmpisetup.exe v10.1.3.

- Install the mpi4py library using pip, that is:

pip install mpi4py.

We run our MPI model from PowerShell, using a command like mpiexec -np 20 python -m mpi4py model-4-mpi.py. This is more complex than running the Multi model:

- mpiexec is the name of the Microsoft MPI application.

- -np 20 tells the MPI application to use 20 processes. We retrieve this parameter in the model using processesToUse = MPI.COMM_WORLD.Get_size().

- -m mpi4py tells Python to run our program via the mpi4py module (which is also imported into our program).

- model-4-mpi.py is the name of our model's program.

Figure 4 shows the main function of the MPI model. Each process is called a "worker", with the number of the current worker retrieved via MPI.COMM_WORLD.Get_rank(). Unlike with the multiprocessing library, we need to allocate tasks to the processes. Our code allocates the tasks as evenly as possible across the workers – some workers get n tasks, while the rest get n + 1 tasks. Each case allocated to a worker is then run in a loop.

    worker = MPI.COMM_WORLD.Get_rank()
    minTasksPerWorker = numRows // processesToUse   # Divide the tasks as evenly as possible between the processes. Some processes will do n tasks
    maxTasksPerWorker = minTasksPerWorker + 1   # And some processes will do n + 1 tasks
    plusOneWorkers = numRows - (minTasksPerWorker * processesToUse)
    stdWorkers = plusOneWorkers - 1
    if worker <= stdWorkers:
        caseFrom = worker * maxTasksPerWorker
        caseTo = caseFrom + maxTasksPerWorker
        cases = range(caseFrom, caseTo)
    else:
        caseFrom = (stdWorkers + 1) * maxTasksPerWorker + (worker - plusOneWorkers) * minTasksPerWorker
        caseTo = caseFrom + minTasksPerWorker
        cases = range(caseFrom, caseTo)
    for case in cases:
        Obj, Solution = tasks(case, worker)

A key point to note is that, unlike multiprocessing, each worker runs the same whole program. That's why we need to identify the current worker and allocate tasks accordingly. We could also pass data between workers, if we wanted to coordinate their actions – though that isn't required in this situation.

Compared with the multiprocessing library, the MPI approach gives us more control over how the tasks are allocated to processes. But it also requires more effort from us.
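The allocation logic in Figure 4 can be checked without MPI by expressing it as a plain function. The sketch below is our restatement of that logic, using a hypothetical function name, cases_for_worker. It confirms that, for 158 cases and 20 workers, every case is allocated exactly once.

```python
def cases_for_worker(worker, numRows, processesToUse):
    """Return the contiguous block of cases allocated to one worker.

    The first (numRows % processesToUse) workers get one extra case each.
    """
    minTasksPerWorker, plusOneWorkers = divmod(numRows, processesToUse)
    maxTasksPerWorker = minTasksPerWorker + 1
    if worker < plusOneWorkers:
        caseFrom = worker * maxTasksPerWorker
        caseTo = caseFrom + maxTasksPerWorker
    else:
        caseFrom = (plusOneWorkers * maxTasksPerWorker
                    + (worker - plusOneWorkers) * minTasksPerWorker)
        caseTo = caseFrom + minTasksPerWorker
    return range(caseFrom, caseTo)


if __name__ == '__main__':
    allocation = [cases_for_worker(w, 158, 20) for w in range(20)]
    covered = [case for cases in allocation for case in cases]
    assert covered == list(range(158))    # Every case appears exactly once, in order
    print([len(cases) for cases in allocation])
```

With these inputs, 18 workers get 8 cases each and 2 workers get 7 cases each. A simpler alternative, if contiguous blocks aren't needed, would be a round-robin allocation such as range(worker, numRows, processesToUse).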

The Serial and Multi models output a summary of the results at the end. We haven't found a simple way to do that within the MPI model. One method we've seen uses a batch program to run mpiexec then separately collates the results for presentation. We haven't implemented that approach here.
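One possible way to produce a summary within an MPI program, which we have not tested with our model, is mpi4py's gather method. Each worker sends its list of results to worker 0, which collates and prints them. The sketch below uses a hypothetical stand-in for the tasks function, made-up objective values, and a round-robin allocation for brevity. It falls back to a single worker if mpi4py isn't installed, so that the collation logic can be tried on its own.

```python
try:
    from mpi4py import MPI
except ImportError:      # Fallback so the collation logic can be tried without MPI installed
    MPI = None


def tasks(case, worker):
    """Hypothetical stand-in for solving one case on a given worker."""
    obj = (case - 3) ** 2 + 1.0
    return obj, f'Case {case} (worker {worker}): objective {obj:.4f}'


def collate(gathered):
    """Flatten the per-worker result lists and sort them into case order."""
    flat = [item for worker_results in gathered for item in worker_results]
    return sorted(flat, key=lambda item: item[0])


def main(numRows=10):
    if MPI is None:
        comm, worker, processesToUse = None, 0, 1
    else:
        comm = MPI.COMM_WORLD
        worker = comm.Get_rank()
        processesToUse = comm.Get_size()
    # Round-robin allocation, for brevity. Each result is (case, objective, solution)
    local = [(case, *tasks(case, worker))
             for case in range(worker, numRows, processesToUse)]
    gathered = [local] if comm is None else comm.gather(local, root=0)
    if worker == 0:      # gather returns the full list on worker 0, and None elsewhere
        results = collate(gathered)
        best = min(results, key=lambda item: item[1])
        for _, _, solution in results:
            print(solution)
        print(f'Best: {best[2]}')


if __name__ == '__main__':
    main()
```

The lowercase gather method pickles ordinary Python objects, which is convenient for small results like these. Whether this approach suits a real model depends on the size of each worker's results.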

Performance of the models

Number of processes

We might assume that using more processes improves performance – but that is not necessarily the case. Our PC's CPU is an Intel Core i7-14700KF processor. It has 20 cores: 8 "performance cores" that operate at a clock speed of up to 5.6 GHz, and 12 "efficient cores" that operate at up to 4.3 GHz. The performance cores have two threads each, for a total of 28 threads. The performance of our program depends on which core a task is assigned to.

Although the HiGHS solver is mostly single-threaded, it does sometimes use multiple threads. The Python environment and mpiexec application also use some of the CPU's capacity while the code is running. If we have many processes running at once, then they can compete for the PC's resources – for example, the CPU will spend time swapping tasks, rather than running them – which can reduce overall efficiency and increase run time.

Both the Multi and MPI models allow us to control the maximum number of processes to use. The result of varying this parameter, while running 158 cases each using the full 20,000 item data set, is shown in Figure 5. The run time is in seconds, and the CPU usage is a typical value. The Multi and MPI model run times are very similar.

As we increase the number of processes, the run time initially falls dramatically. Our Serial model, using only one process, takes 1 hour 41 minutes (6,055 seconds) to run all 158 cases. Restricting the Multi and MPI models to only one process produces about the same run time. Using two processes almost halves the run time. Using four processes almost halves the run time again. Note the "almost" qualifier – there is some overhead to using multiple processes, though it is proportionately small in this example.

The run time continues to decrease as we use more processes, though the incremental benefit reduces. The minimum run time occurs when we have around 16 to 20 processes, but then it rises slightly as we increase the number of processes to our maximum of 28. This behaviour is, presumably, because our CPU has 20 cores / 28 threads. HiGHS and Python both do some multi-threading so, even when the number of processes is limited to one, our program is often running more than one thread. The CPU usage reaches 100% in total when running 20 processes, so having more processes has no additional benefit, and even has a small detrimental impact on total run time as the processes compete for resources.

Overall, using 20 processes, our parallel models are almost 10 times faster than our Serial model – taking 10 or 11 minutes rather than 101 minutes to complete all 158 cases. That's a great improvement, especially given the relatively small code changes needed to convert the Serial model to run in parallel.

Amdahl's law

Amdahl's law is often used in parallel computing to predict the theoretical speedup when using multiple processors. Essentially, Amdahl's law says that the speedup is limited by the part of the work that cannot be run in parallel, so we should not expect the speedup to be proportional to the number of processors. In practice, fixed overhead and various inefficiencies in running the processes reduce the speedup further.

In our situation, getting a 10 times speedup by running 20 processes in parallel is a good result. It suggests that our procedure is quite efficient, with minimal fixed overhead. We could do better by optimizing the code – for example, by reading the data once, rather than reading it for each case. But that would significantly complicate the parallel models compared with the Serial model, while the gains would be small. In addition, having more CPU cores/threads should further reduce the run time, but it is likely that the improvement would be small relative to the improvement we've already achieved.

Note that "processors", as used in Amdahl's law, is different to the "processes" in our situation. In this context, a processor is a CPU core, while a process is a task run on the CPU cores, potentially using multiple threads.
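As a rough illustration, Amdahl's law can be written as S(n) = 1 / ((1 - p) + p / n), where p is the fraction of the work that can run in parallel and n is the number of processors. The sketch below inverts that formula to find the value of p that would be consistent with a speedup of 9.6 using 20 processes. This is only indicative: Amdahl's law assumes identical processors, while our CPU has a mix of faster and slower cores, so the fitted fraction should not be read as a measurement of our code. The function names are our own.

```python
def amdahl_speedup(p, n):
    """Theoretical speedup with n processors when a fraction p of the work is parallel."""
    return 1.0 / ((1.0 - p) + p / n)


def implied_parallel_fraction(speedup, n):
    """Invert Amdahl's law: the fraction p that would produce the given speedup."""
    return (1.0 - 1.0 / speedup) / (1.0 - 1.0 / n)


if __name__ == '__main__':
    p = implied_parallel_fraction(speedup=9.6, n=20)
    print(f'Implied parallel fraction: {p:.3f}')
    for n in (1, 2, 4, 20, 28):
        print(f'{n:2d} processors: speedup {amdahl_speedup(p, n):.2f}')
```

The implied parallel fraction is about 0.94. Taken at face value, that would cap the speedup at about 1 / (1 - p), or roughly 17 times, no matter how many identical processors we add.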

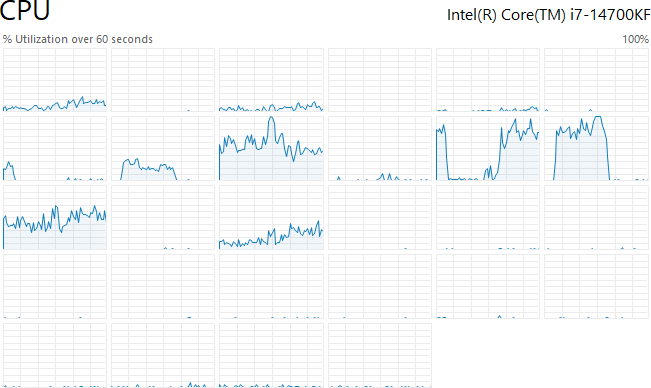

CPU utilization

Figure 6 shows a snapshot of each CPU thread's utilization while running a single process with the Serial model.

The combined CPU utilization is equivalent to a total of about two threads, spread across four threads. A small part of this utilization is various other applications, like the operating system and the Windows Task Manager, but most of it is Python and the HiGHS solver. When this snapshot was taken, 24 of the 28 available threads were doing essentially nothing.

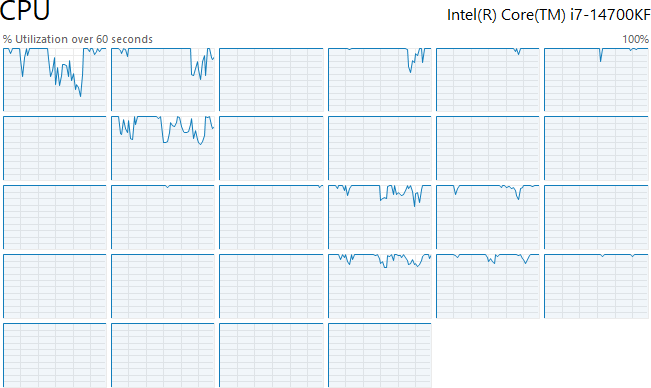

In contrast, Figure 7 shows the CPU utilization while running 20 processes with the Multi model. Again there is a small amount of utilization due to other applications, but 20 copies of Python and HiGHS are keeping the CPU very busy. Note that the 20 processes are using all 28 threads, reflecting the modest amount of multi-threading that is occurring. There are times when utilization drops below 100%, but only briefly and not by much in total.

If we use fewer than 20 processes, then the average CPU utilization is lower. For example, with 16 processes the CPU averages around 95% utilization and seldom reaches 100%.

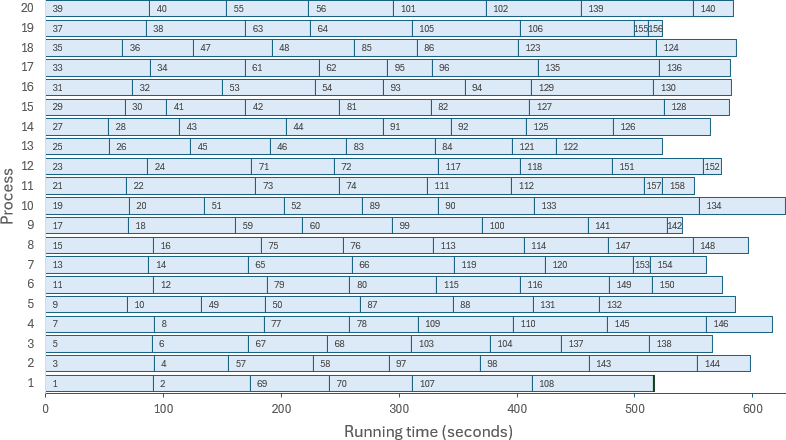

Allocation of tasks to processes

Figure 8 shows how the multiprocessing library allocated our 158 tasks to 20 processes. When a task completes, a new task is started immediately. The result is that Process 1 runs six tasks, while the other processes each run eight tasks. All the tasks are completed by 628 seconds.

Scheduling tasks could further improve parallel performance, maybe

Towards the end of the run, the processes get a bit ragged. This happens with both the Multi and MPI models. Figure 9 shows the number of processes running over time for the Multi model. At the beginning, the multiprocessing library immediately starts 20 processes. It then maintains 20 processes for 516 seconds. After that, as tasks complete, we have fewer and fewer processes running – until there is just one process running for the last 12 seconds, completing after a total of 628 seconds. Allowing for a bit of time to write the output, the total run time is 629 seconds.

It appears that the multiprocessing library decides the allocation of tasks to processes at least partially in advance. But if we could predict how long each process will take to run, and if we control how multiprocessing orders the tasks, then we could reduce the total run time. For example, if we allocate Task 134 to Process 1 instead of Process 10, then the run time would reduce by 12 seconds. Further rearranging of the task allocation could further reduce the total run time.
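A likely explanation is that the pool's map method splits the iterable into chunks and hands each chunk to a process as a unit. The chunk size can be set via map's chunksize parameter. When it isn't set, current CPython versions calculate a default from the number of tasks and the number of processes. The sketch below reproduces that calculation. Note that it is an implementation detail rather than documented behaviour, and the function name is our own.

```python
def default_chunksize(numTasks, processes):
    """Chunk size that CPython's Pool.map uses when chunksize is not specified.

    This mirrors an implementation detail, so it may differ between Python versions.
    """
    chunksize, extra = divmod(numTasks, processes * 4)
    if extra:
        chunksize += 1
    return chunksize


if __name__ == '__main__':
    print(default_chunksize(158, 4))    # 4 processes
    print(default_chunksize(158, 20))   # 20 processes
```

For 158 tasks, this gives chunks of 10 tasks with 4 processes, which matches Figure 3, where the first tasks started are 1, 11, 21, and 31. With 20 processes, the chunks have 2 tasks each. Calling pool.map(func = tasks, iterable = cases, chunksize = 1) would hand out tasks one at a time, which may reduce the raggedness at the end of the run, at the cost of slightly more communication between processes. We haven't tested that option with our model.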

Scheduling tasks to reduce the run time is a type of optimization problem: the makespan minimization problem. The objective is to have all the processes complete as close as possible to the same time, to minimize the total elapsed run time. Given the observed run time of each task, it is potentially possible to reduce the makespan from 629 seconds to 572 seconds – a further 10% reduction in run time.

However, in practice, exercising that much control over the program would be difficult (e.g., we would need to know whether a process is assigned to a performance or an efficient CPU core), and the run times of each process are uncertain rather than being known in advance (due to interactions between the processes competing for resources). Perhaps we could get a further 5% reduction in total run time, maybe. In this example, the effort isn't worthwhile – though in some circumstances it might be.
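To give a feel for the scheduling problem, the sketch below applies a simple greedy heuristic known as longest processing time first (LPT): sort the tasks by duration, longest first, then repeatedly give the next task to the process with the least work so far. This is a heuristic, not the optimization model that produced the 572 seconds estimate, and the task durations are hypothetical.

```python
import heapq


def lpt_schedule(durations, processes):
    """Greedy longest-processing-time-first schedule.

    durations maps task name to duration. Returns (makespan, assignment), where
    assignment maps each process index to its list of tasks.
    """
    heap = [(0.0, p) for p in range(processes)]   # (current load, process index)
    heapq.heapify(heap)
    assignment = {p: [] for p in range(processes)}
    for task in sorted(durations, key=durations.get, reverse=True):
        load, p = heapq.heappop(heap)             # Least-loaded process so far
        assignment[p].append(task)
        heapq.heappush(heap, (load + durations[task], p))
    makespan = max(load for load, _ in heap)
    return makespan, assignment


if __name__ == '__main__':
    durations = {'A': 7, 'B': 5, 'C': 4, 'D': 3, 'E': 3, 'F': 2}   # Hypothetical seconds
    makespan, assignment = lpt_schedule(durations, processes=2)
    print(f'Makespan: {makespan}')
    print(assignment)
```

The heuristic needs the durations in advance, which is exactly the information that is uncertain in practice. Estimates from a previous run might be good enough to order the tasks, but that is an assumption we haven't tested.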

Old PC vs new PC

Specification comparison

The event that prompted writing this article was the purchase of a new modelling PC. Our old modelling PC is more than 10 years old. It is still adequate for general use, but it was time to upgrade to a more modern PC for model development. The new PC has a CPU with 28 threads, which makes parallel processing a viable strategy.

Some specifications for the old and new PCs are shown in Figure 10. The new PC has more CPU cores/threads, higher boost CPU clock speed, larger and faster memory, and a faster solid state drive (SSD).

It is important to note that running multiple processes may require a lot of memory. Each process has a copy of Python, the model, the data, the solver, and whatever else is needed to run the program. In this example, our model is fairly small, so each of our processes typically uses about 600 MB of RAM. With 20 processes, that amounts to a total of 12 GB of RAM. A larger model might require a lot of RAM to run multiple instances in parallel.
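A simple way to account for memory when choosing the number of processes is to cap it at whichever is smaller: the number of CPU threads, or the number of processes that fit in the available RAM. The sketch below is a hypothetical helper, not part of our models. The memory figures passed to it are illustrative, apart from the 600 MB per process noted above.

```python
import os


def max_processes(available_ram_mb, ram_per_process_mb, logical_cpus=None):
    """Largest sensible process count, limited by both CPU threads and RAM."""
    if logical_cpus is None:
        logical_cpus = os.cpu_count() or 1    # cpu_count can return None
    by_memory = available_ram_mb // ram_per_process_mb
    return max(1, min(logical_cpus, by_memory))


if __name__ == '__main__':
    # Hypothetical: 6,000 MB free, 600 MB per process, 28 threads
    print(max_processes(6000, 600, logical_cpus=28))
    # Hypothetical: 32,000 MB free, so the CPU is the limit
    print(max_processes(32000, 600, logical_cpus=28))
```

In the first case memory is the binding limit, while in the second case the thread count is. As the performance results show, the best process count may be lower than either limit.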

Performance comparison

Figure 11 shows the Serial and Multi model run times, for the old and new PCs, given each of the three data set sizes. For the parallel runs, the old PC is using 4 processes (about 30% faster than 3 processes), while the new PC is using 20 processes.

Running a single model instance on the new PC is, for the largest data set, around twice as fast as on the old PC. That's a result of improvements in CPU design, along with the other specification improvements. This means that, for example, the HiGHS solver is about twice as fast on the new PC compared with the old PC. This is a useful improvement, though not spectacular.

On the old PC, running the Multi model more than halves the run time compared with running the Serial model. That is expected, as the old PC is running up to four processes in parallel.

We get much better performance improvement from the new PC, partly because the processes run twice as fast, but mostly because it is running up to 20 processes in parallel. Consequently, on the new PC, the Multi model is almost 10 times faster than the Serial model.

The overall result is that, for the largest data set, the new PC running the Multi model is 19 times faster than the old PC running the Serial model.

Observations about using multiprocessing and mpi4py

Given our experience with converting our serial model to run in parallel, we make the following observations:

- Needing to run many cases is a common modelling requirement. It is usually done by running the cases serially on a single computer, or perhaps using multiple computers – often with a significant manual component for managing the cases. Automatically running cases in parallel on a single computer is potentially much more convenient and efficient.

- Running the 158 cases in parallel on the new PC reduced the run time from 1 hour 41 minutes (Serial model) to 10 minutes (Multi model) – an improvement of about 10 times faster.

- We use Python's multiprocessing and mpi4py libraries to manage the parallelization of our code. Both libraries can do a lot more than we describe in this article. Our example uses just enough of each library's functionality to run our cases in parallel.

- Only minor code changes were necessary. The optimization model is unchanged, which is useful. Essentially, all we did is call the parallel libraries with a list of the cases – though the multiprocessing and mpi4py libraries use somewhat different approaches to handling the cases. We also need slightly different handling of the output for each case. The code changes are straightforward.

- On our 20 core / 28 thread CPU, the optimal number of parallel processes is about 20. Running fewer processes in parallel doesn't use all the CPU's capacity. Running more processes slightly increases the total run time, due to competition for computer resources.

- Our model is small, and our computer has a good amount of RAM, so memory was not a constrained resource in this example. For a larger model, it may be necessary to have a lot of RAM.

- There is a substantial gain from having more cores/threads. While running tasks in parallel, compared with serially, an old 4 core / 4 thread CPU achieved a speedup factor of 2.3, while a new 20 core / 28 thread CPU achieved a speedup factor of 9.6.

- The parallel models' performance goes a bit awry towards the end of the run. After most tasks have been completed, there are no new tasks to start. Therefore, the number of running processes falls until only one process is running. Better scheduling of the tasks across the processes could, in theory, reduce the total makespan of the run. But that scheduling is, itself, an optimization problem that would require significant effort, along with data that may not be available. The scheduling would be easier to do using the mpi4py library. In practice, the incremental performance gain would likely be small – and the time taken to solve a makespan minimization model might even exceed the run time it saves.

- In creating the parallel models, we wanted to make minimal changes to the Serial model's code. If we made more extensive changes, then we could further improve the parallel performance. For example, each case reads the data from a file and extracts what it needs. It would be faster to read the file just once during the setup. But the performance gain would be small – around 0.2 seconds per case. Along with other improvements, we could potentially reduce the run time by one or two minutes. That doesn't seem worth the effort in this situation.

- Implementing the Multi model was easier than implementing the MPI model. That's mainly because our MPI model has to handle allocation of the tasks to the processes. But that's a fairly minor point. More importantly, when using the multiprocessing library, it is easier to do one-off tasks before and after running the cases in parallel. Consequently, we prefer the multiprocessing library over the mpi4py library – though that preference may change given different requirements.

- Note that we've focussed on running multiple cases in parallel. Solving a single model in parallel is an entirely different issue. Some solvers, like Gurobi and OR-Tools CP-SAT, do a significant part of their work in parallel. Other solvers, like CBC and HiGHS, are mostly single-threaded. Making a solver multi-threaded is a complex job.
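On the point about reading the data just once: with the multiprocessing library, one way to do that is the pool's initializer argument, which runs a function once in each process when the pool starts. The sketch below is a minimal illustration, not our model's code. The load_data function, its contents, and the file name data.csv are all hypothetical, and no file is actually read.

```python
import multiprocessing as mp

_data = None   # Per-process cache, filled once by the initializer


def load_data(path):
    """Hypothetical loader, standing in for reading the model's data file."""
    return {case: case * 1.5 for case in range(100)}


def init_worker(path):
    """Runs once in each pool process, so each process loads the data only once."""
    global _data
    _data = load_data(path)


def tasks(case):
    """Hypothetical task that uses the cached data instead of re-reading the file."""
    return case, _data[case] + 1.0


if __name__ == '__main__':
    with mp.Pool(processes=4, initializer=init_worker, initargs=('data.csv',)) as pool:
        result = pool.map(tasks, range(10))
    print(result)
```

With this approach, the data is loaded once per process rather than once per case. For our model, the saving would be small, as noted above.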

Conclusion

In this article, we describe using Python's multiprocessing and mpi4py libraries to run optimization model cases in parallel, and we compare the performance with running the cases serially. Running cases in parallel allows us to greatly improve the performance of our scenario analysis.

The results are impressive: our 158 cases run 10 times faster. Reducing a model's run time by an order of magnitude is always welcome, especially when converting our model to run cases in parallel is straightforward.

Given an appropriate computer, Python's multiprocessing and mpi4py libraries are potentially useful additions to an optimization modeller's toolkit. We prefer the multiprocessing library, because it is easier to implement. But both libraries do the job well, so the choice between them may depend on the exact requirements of a specific project.

If you would like to know more about this model, or you want help with your own models, then please contact us.