10 January 2024

Completing crossword puzzles is a popular pastime. There are many puzzle variations, ranging from simple to fiendishly difficult.

The process of creating a crossword puzzle – known as "compiling" – is difficult. Compiling a crossword can be thought of as a type of search problem, where we need to find a set of words that fits the rules for filling a specific crossword grid. Unsurprisingly, many crossword compilers use software to help them find a suitable set of words.

One approach is described in an excellent recent article on Philippe Olivier's blog: Generating crossword grids using Constraint Programming. That article inspired us to try using a different method: Mixed Integer Linear Programming (MILP).

We're not the first to consider using MILP to compile crosswords. An article published by Wilson in 1989 reported attempts to compile small crosswords using MILP models, as discussed in our previous article Solver performance - 1989 vs 2024. Wilson's conclusion was very pessimistic, noting that "the prospects of using integer programming for any type of puzzle of realistic size and with a substantial lexicon remain bleak". The problems were insufficient computer memory and the time need to solve even a small crossword grid using a then state-of-the-art, million-dollar superminicomputer.

But a lot has changed in the 35 years since 1989. Computers are thousands of times faster, and their memory capacity has increased by a similar factor. In addition, optimization solver software is literally millions of times faster. Models that were difficult to solve in 1989 are now trivial. Solutions that were impossible to find in 1989 may now be within reach. Does that include compiling crosswords of realistic size?

In this series of articles, we discuss:

- Representing a word puzzle in mathematical terms, enabling us to formulate the crossword compilation problem as a MILP model.

- Techniques for fine-tuning the model-building process in Pyomo, to make a model smaller and faster, including omitting constraint terms, skipping constraints, and fixing variable values.

Download the models

The models described in this series of articles are built in Python using the Pyomo library.

The files are available on GitHub.

Situation

We want to create a model that compiles crossword puzzles. That is, given a grid design and a word lexicon, find a set of words that fit in the grid while complying with the crossword's rules.

This situation is sometimes characterized as a constraint satisfaction problem, rather than as an optimization problem. That's because we generally just want a feasible solution rather than an optimal solution, as what constitutes "optimal" words in a crossword is a subjective assessment. Nonetheless, using a MILP with an objective function will allow us to tune the potential solutions.

There are many types of crossword. The key features of a crossword are:

- Shape. Most crossword grids are square, typically of size 11x11 or 15x15 cells. Though there are many variations, including rectangular, intersecting squares, and irregular. Most grids have an odd number of cells in each dimension. This seems to be because most designs have rotational and/or reflective symmetry or near-symmetry, which is easier to do with an odd number of cells without causing difficulties in the grid's centre.

- Shading. Cells that must contain letters are white, while cells that must be empty are black.

- Word direction. Each word is orientated in either an Across (left-to-right) or Down (top-to-bottom) direction. Some across and down words intersect, or crossover, at specific cells.

- Density. Some grids are very dense, with few black (empty) cells and many word intersections. Others are sparse, with each word having only one or two intersections with other words. Dense grids are often called American-style puzzles, while British-style puzzles are less dense. For a human completing a crossword, generally the higher the grid density, the more difficult the puzzle is to solve.

- Clues. The types of clues also vary, including dictionary-like definitions or cryptic clues. Some crosswords have a grid pre-populated with only a few letters, and no other clues. We'll ignore the clues, which can be added after the model has determined which words to place in the grid.

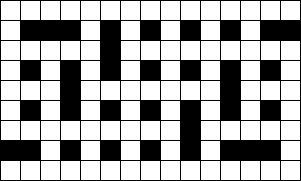

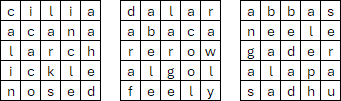

We'll focus on a classic square grid crossword (though our model will also handle rectangular grids). An example is shown in Figure 1. This is a 7x7 grid, in the British style. There are seven words (four across and three down). Every word intersects with at least two other words, with 10 crossovers in total. The grid density, the number of white cells as a percentage of total cells, is 63.3%.

So, we need a way to define a crossword grid. Then, given a lexicon of words, we want to populate the grid with words that form a valid crossword solution. How hard could it be?

Model design

Encoding the crossword grid

Given a 20 billion times speed increase of computers and solvers since 1989, we have reason to be optimistic that a MILP model can be used to solve at least some crossword puzzle compilation problems. But even now, this is not a simple problem.

We still have the key model design issue that Wilson faced in 1989: how to represent a word problem as a mathematical model. We haven't attempted to exactly replicate the MILP models proposed by Wilson in 1989. Instead, we take an approach that we think is better suited to a modern algebraic modelling language like Pyomo. Our approach is conceptually similar to Wilson's first model, which attempts to fit whole words into a grid.

A key challenge is representing the crossword grid, with its letters and intersecting words, in a way that a MILP can handle. We need a data structure that uniquely identifies each grid word position and each letter within a grid word. We also need to identify where the across and down words cross – an essential attribute of a crossword.

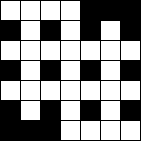

Our approach to encoding the crossword grid is illustrated in Figure 2, which shows an example 7x7 grid. For convenience, we encode each grid in an Excel file. That is, we encode a grid using four matrices:

- Across reference. Each "across" word is numbered, with each letter of a word having the same number. Each word must have a unique number, and the numbers must be consecutive starting at 1. The position of each word's number in the grid is arbitrary, though we generally number the words from top-left to bottom-right.

- Across position. For each across word, we number each character from left-to-right.

- Down reference. As for the across reference, but for the "down" words. Some crosswords use numbering like "1 across" and "1 down". That style is not permitted in our encoding – the words (across and down combined) must have unique, consecutive numbers.

- Down position. As for the across position, but for the down words.

In addition:

- Cells that must contain a letter are white, and cells that must not contain a letter are black. The is purely cosmetic, as the cell colours are not used by the model.

- All cells must contain a number.

- The black cells must contain zero (formatted as a dash).

- White cells that are not part of a word in a specific direction must also contain a zero.

Given a grid design, much of the encoding could be automated – for simplicity, we have created our example grids manually.

Crosswords are usually square, though not always. Our model allows for any rectangular shape – like the 7x7 grid in Figure 2 or the 9x15 grid at the top of this article.

Gutenberg lexicon

We need to provide a list of words – a lexicon – that the model can use to populate the grid. In our modelling, each word is called a "candidate". A larger lexicon will provide more variety in the crosswords and improve the odds of there being a feasible solution for a given grid. However, a larger lexicon will make the model larger, potentially greatly increasing the solution time.

For testing our model, we chose a lexicon consisting of words from books found on Project Gutenberg. After removing words that contain punctuation characters and digits, along with removing words of one character and more than 15 characters (an arbitrary upper limit), there are 35,259 words in our lexicon. We also made all words lowercase. The lexicon is stored in Excel.

A useful feature of the Gutenberg lexicon is that it has the frequency of use for each word, which we can use in an objective function for choosing more or less common words.

The distribution of the Gutenberg lexicon's word length is shown in Figure 3. There are 109 words of length 15 characters, and around 5,000 words each for lengths of 6, 7, and 8 characters.

Large lexicon

For some examples we want to use a larger lexicon. The alpha word list from List of English words contains 359,039 words, after removing words of 1 or 16+ characters. We added 1,567 words that are in the Gutenberg lexicon but not the alpha word list, for a total of 360,606 words.

This lexicon didn't have frequency data, so we created a value based on the frequency of letters. The details of how that was done aren't important, as the frequency column doesn't help much.

The distribution of the large lexicon's word length is shown in Figure 4. This lexicon includes more words of every length, especially the longer words. There are more than 50,000 words for lengths 8 and 9 characters.

Encoding characters

Words, and the characters they consist of, are the essence of a crossword puzzle. To work with words in our model, we encode each character as an integer using its ASCII code. That is, the character "a" has ASCII code 97 and the character "z" has ASCII code 122. We use only characters a to z (all lowercase), though any characters could be used.

Python has functions for working with ASCII codes, which makes the encoding process straightforward.

Crossword grid rules

Our model needs to represent the rules for compiling a crossword grid. For the classic crossword design that we're modelling, the rules are:

- Each position once. We may allocate exactly one word from the lexicon to each word position in the grid.

- Each word once. Each word in the lexicon may be allocated to only one grid position. We could omit this rule to allow a word to be used more than once, so this rule is optional. For the specific "single word square" variant we'll look at soon, this rule will need to be modified.

- Words fit. A word allocated to a grid position must exactly fill the whole position. For example, if a grid word position is six characters long, then we must use a word from the lexicon that is six characters long. Some crossword designs do not adhere to this rule – for example, American-style crosswords often use compound words, like "gridmodelsolver", to fill some grid word positions. We'll stick to using only whole words.

- Crossover. The cells where across and down words intersect must have the same letter.

Objective function

Since we're using an optimization model, we need an objective function.

As a starting point, we'll optimize the frequency of the words allocated to the grid. For the Gutenberg lexicon that means preferring words based on their frequency of use. For the large lexicon that means preferring words based on the frequency of their letter appearance in the lexicon.

We may choose, via an option in the model, to minimize or maximize the objective function. However, we mostly stop at the first feasible solution, so we don't make much use of the objective function. We do this by setting the solver's "MIP gap" option to a large number – the specific number needed depends on the solver. When using Gurobi, we can also set a specific option that instructs the solver to stop at the first feasible MIP solution.

Model 1 Formulation

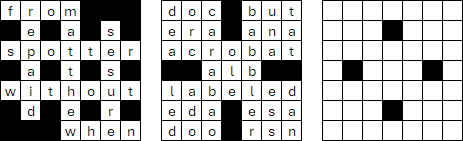

We formulate the crossword design rules as a mixed integer linear program, as shown in Figure 5. That is:

- Equation (1). The objective function maximizes (or minimizes) the total frequency of the allocated words, as specified in the lexicon. This is an arbitrary objective that doesn't help the solver much. Essentially, we're looking for a feasible solution rather than a truly optimal solution.

- Equation (2). Each position once, as defined above.

- Equation (3). Each word once, as defined above.

- Equation (4). Words fit, as defined above.

- Equation (5). Crossover, as defined above. Our notation is succinct and only a sketch of what the constraint does. Refer to the Python code to see exactly what this constraint means, given the grid encoding and ASCII coding of the candidate words.

We acknowledge that our specification of the crossover constraint is a bit loose – we have attempted to show the intent rather than the exact math. But we have also published the code, so you can see exactly how the constraint is implemented in a working model. Having the code helps you understand the model and enables you to make any changes you want to make.

We wish published academic papers would include working code and data for their models, in addition to the mathematical formulations. In many papers, the math is poorly defined and hard to understand – often it is just plain wrong. The lack of code in most academic papers makes them difficult and time-consuming to replicate, which greatly undermines their value.

The model's binary variables are a matrix with size number of candidate words by number of grid word positions. The model grows quickly as we increase the dimensions of the grid and the size of the lexicon. For example:

- Given a lexicon of 1,000 words for the 7x7 grid in Figure 1, Model 1 has 7,000 binary variables.

- A larger grid might have dozens of words and we might use a lexicon with tens of thousands of words. Model 1 would have hundreds of thousands or millions of binary variables.

- A grid with 100 words, using all 360,000 words in the Large lexicon, would have 36 million binary variables – which is a very large model.

Implementation

Data preparation

There's a lot of data preparation needed to create the structures used in Model 1. The essential tasks include:

- Read the grid encoding.

- Read the lexicon.

- Encode each word in the lexicon as ASCII codes.

To manage the model size, we include a facility to take a random sample of the lexicon, using the parameter SampleSize. We also specify a random seed and number of iterations to run. Each run of the model uses a different random seed, starting at RandomSeed and incrementing by 1 each run up to a maximum of MaxIterations runs.

In most instances we use a sample size of a few thousand words, selected from one or other lexicon. In some cases, we use the full Gutenberg lexicon, or up to 50,000 words from the Large lexicon. More words mean a larger model, which may take much longer to solve. But too few words may make a puzzle infeasible.

Pyomo model

The Pyomo code, as shown in Figure 6, is an almost direct translation of the formulation.

The complicated part is the Crossover constraint, where we ensure that across and down words that intersect have the same letter in the crossover cell. We do this by comparing each grid word with each other grid word, but only if they intersect (that's what the if part of the constraint does). If the words do intersect, then the ASCII codes (letters) in all intersection cells must be equal.

The crossover constraint is the reason why we encode the grid using reference and position arrays. That is, we need to know if grid words intersect and, if so, which cells intersect.

def DefineModel(Model):

Model.Allocation = pyo.Var(Model.Candidate, Model.GridWords, within = pyo.Binary, initialize = 0) # Allocate candidate words to grid

def rule_PosOnce(Model, g): # Allocate exactly one candidate to each grid word position

return sum(Model.Allocation[c, g] for c in Model.Candidate) == 1

Model.EachPositionOnce = pyo.Constraint(Model.GridWords, rule = rule_PosOnce)

def rule_WordOnce(Model, c): # Allocate each word to a grid position at most once. Optional constraint

return sum(Model.Allocation[c, g] for g in Model.GridWords) <= 1

Model.EachWordOnce = pyo.Constraint(Model.Candidate, rule = rule_WordOnce)

def rule_Fit(Model, g): # Ensure word exactly fills its allocated grid space

return sum(Model.Allocation[c, g] * Model.Length[c] for c in Model.Candidate) == Model.GridLengths[g]

Model.WordsFit = pyo.Constraint(Model.GridWords, rule = rule_Fit)

def rule_Intersection(Model, g1, g2, w, h): # The intersection of grid words must have the same letter

if pyo.value(Model.AcrossRef[w, h]) != 0 and pyo.value(Model.DownRef[w, h]) != 0 and g1 == pyo.value(Model.AcrossRef[w, h]) - 1 \

and g2 == pyo.value(Model.DownRef[w, h]) - 1:

return sum(Model.Allocation[c, g1] * Model.Word[c, pyo.value(Model.AcrossPos[w, h]) - 1] for c in Model.Candidate) == \

sum(Model.Allocation[c, g2] * Model.Word[c, pyo.value(Model.DownPos[w, h]) - 1] for c in Model.Candidate)

else:

return pyo.Constraint.Skip

Model.Crossover = pyo.Constraint(Model.GridWords, Model.GridWords, Model.GridWidth, Model.GridHeight, rule = rule_Intersection)

def rule_Obj(Model):

return sum(sum(Model.Allocation[c, g] for g in Model.GridWords) * Model.Frequency[c] for c in Model.Candidate)

Model.Obj = pyo.Objective(rule = rule_Obj, sense = pyo.maximize)The main reason we use def functions when writing Pyomo models is so that we can include conditions when building constraints or the objective function – like we do with the crossover constraint. Often models don't need conditions, but it is helpful to use a consistent structure anyway. We'll use more complex conditions in Model 2.

Note that the if condition used in building the crossover constraint uses only data. It cannot use variables to make decisions about which terms to include in a constraint, as variable values are unknown when constructing the model. Also, to allow for the possibility that the conditions exclude all constraint terms given specific values of grid words and cells (indicated by width and height coordinates), we include an else clause that skips empty instances of the constraint.

Solver selection

We thank Gurobi Optimization for providing an evaluation version of their solver for this article.

We thank Gurobi Optimization for providing an evaluation version of their solver for this article.

For MILP models, we mostly use the open-source HiGHS solver – because it is freely available to our readers. Most of the development of this crossword modelling was done using HiGHS. In many cases, HiGHS finds solutions to our crossword problems quickly. However, as we increase the size and complexity of the crosswords, it becomes more difficult for HiGHS to find solutions in a reasonable time.

Therefore, we switched to using the commercial Gurobi solver. Gurobi is generally faster than HiGHS, in some cases many times faster, and it enables us to compile crosswords that would be very difficult to do using the HiGHS solver.

The choice of solver is specified in the model notebook as either SolverName = 'gurobi_direct' or SolverName = 'appsi_highs', assuming both solvers are installed.

Compiling crosswords using Model 1

Starting small: 4x4 grid

To test Model 1, we start with a very small crossword: a 4x4 grid, like that used in Wilson's 1989 paper, as discussed in our previous article Solver performance - 1988 vs 2023.

Because the 4x4 grid has no black cells, it is, strictly speaking, a double word square rather than a traditional crossword. However, the rules for a double word square are the same as those we've implemented in Model 1. The differences are that a word square has 100% density and every cell is a crossover.

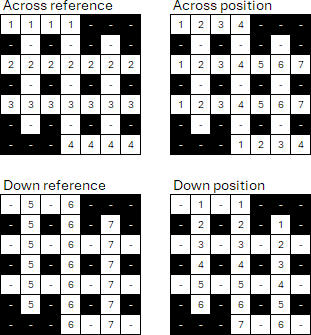

Model 1 finds many solutions for the 4x4 double word square. A selection of the solutions, found using different random seeds and a sample of words from either the Gutenberg or Large lexicon, is shown in Figure 7. These solutions have four letter words across all rows and down all columns. Some of the words are obscure, which is just a reflection of the words in the lexicons.

For example, we can use a sample size of 1,000 words extracted randomly from the more than 7,000 4-letter words in the Large lexicon. Model 1, running the Gurobi solver, finds most solutions for the 4x4 grid in a few seconds or less, though some cases take about a minute.

Looking ahead to Model 2, which we'll discuss in the next article, with a 1,000-word lexicon Gurobi takes 12 seconds to find 10 solutions to the 4x4 double word square puzzle – i.e., an average of just over 1 second per solution. For a 4x4 grid, Model 2 typically takes longer to load the data and build the model in Pyomo than it does to solve the model.

Wilson would be impressed. His modelling in 1989, running on a million-dollar superminicomputer (compared with our very ordinary desktop PC), took half an hour to find a single solution from a lexicon of 100 4-letter words. His model has 800 variables and 524 constraints, which was described as "fairly large for an integer program". Our Model 1, with a 1,000-word lexicon, has 8,000 variables and 1,024 constraints, which we describe as small by today's standards.

Larger double word squares

Double word squares are an especially difficult form of crossword – because they are 100% dense and have many crossover letters. The largest known double word square, using English words, is on an 8x8 grid (larger examples have been constructed using other languages).

Using Model 1, we found many 5x5 double word squares, like those in Figure 8.

We were able to find double word squares larger than 5x5 only when using a fairly small lexicon (e.g. 1,000 words) that we loaded with words known to form a double word square.

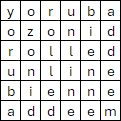

Single word squares

A simpler form of the word square has the same words across and down – known as a "single word square", or just "word square".

To find single word squares, we must modify Model 1. Firstly, we need to allow repeated words, since the same words appears across and down. That's easy, we just change the right-hand-side of Equation (3) to be \(\le 2\) rather than \(\le 1\).

Forcing the symmetry of across and down words requires adding a new constraint:

def rule_Symmetry(Model, g): # Ensure that across words = down words, for creating single word squares

NumWords = len(Model.GridWords) # Always an even number in a square grid

if g <= NumWords / 2 - 1:

return sum(Model.Allocation[c, g] for c in Model.Candidate) == sum(Model.Allocation[c, g + NumWords / 2] for c in Model.Candidate)

else:

return pyo.Constraint.Skip

Model.Symmetry = pyo.Constraint(Model.GridWords, rule = rule_Symmetry)This version of the model is somewhat easier to solve. Even so, the largest example we found – after many attempts – is the 6x6 grid shown in Figure 10.

The largest known English language "perfect" single word square is a 10x10 grid, discovered in 2023. The square is "perfect" in the sense that it contains no proper names, capitalised words, hypenated words, or tautonyms. That solution was found by a specialized search algorithm using a lexicon of 364,915 10-letter words.

Our Large lexicon has "only" 45,947 10-letter words (some of which do not comply with the "perfect" word requirements), including just two of the ten words in the 10x10 word square recently discovered. It is extremely unlikely that our Large lexicon contains words that form a feasible 10x10 single word square.

In any case, Model 1 is not well-suited to compiling single or double word squares. There are much more efficient search algorithms for that task. Our intent is to compile traditional crosswords, rather than word squares, so from here on we'll focus on traditional crossword grids.

7x7 crosswords

We now look at a 7x7 grid, which is quite small for a traditional crossword puzzle.

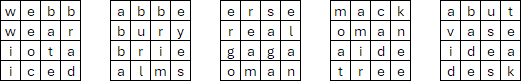

Figure 11 shows three different 7x7 grids. The grid on the left is the same as in Figures 1 and 2. It is a British-style crossword, with 7 words, 10 crossovers, and density of 63%. Using Model 1, finding solutions for this grid is easy, given a sample lexicon of several thousand words. The Gurobi solver finds many solutions in less than 1 second each, after a few seconds for our Python code to read the data and build the model.

The middle grid is more difficult, because it is denser and has more word crossovers. It is an American-style crossword, with 22 words, 41 crossovers, and density of 84%. We found several solutions, though they typically take at least several minutes using the Gurobi solver, even when using a small lexicon sample of a few thousand words (though most samples are infeasible at that lexicon size).

The grid on the right is even more dense – it is almost a word square. It has 18 words, 41 crossovers, and density of 92%. We failed to find any solutions for this grid with Model 1.

Next steps: Larger crosswords

Model 1 works as designed. It can find solutions for small crosswords, but it struggles as the grids get larger and/or denser, and as the size of the lexicon increases.

The main issue with Model 1 is that we formulate general constraints and simply let the solver try to work out how to find a solution. As the puzzles get more difficult, that strategy doesn't work well. But we can do better, by using our knowledge of the problem situation to tighten the model and make the task easier for the solver.

In the next article, we develop a more sophisticated model that will enable us to compile solutions for larger crossword grids.

Conclusion

In this article, we develop a mixed integer linear program for compiling crossword puzzles.

Model 1 works well for compiling small, relatively simple traditional crossword puzzles using a lexicon of a few thousand words. It struggles to compile medium-sized single and double word squares, though that style of crossword isn't our focus. Model 1 also struggles to compile traditional crosswords as the grid gets larger/denser, and as the lexicon size increases.

In the next article, we develop a more sophisticated model with the intent of finding solutions for larger and more complex crossword puzzles.

If you would like to know more about this model, or you want help with your own models, then please contact us.

References

Generating crossword grids using Constraint Programming, Philippe Olivier, 10 October 2023.

Crossword compilation using integer programming, J. M. Wilson, The Computer Journal, Volume 32, Issue 3, 1989, Pages 273–275.