10 January 2024

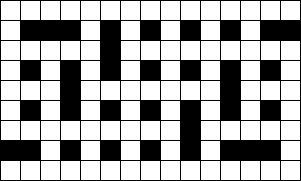

Completing crossword puzzles is a popular pastime. There are many puzzle variations, ranging from simple to fiendishly difficult.

The process of creating a crossword puzzle – known as "compiling" – is difficult. Compiling a crossword can be thought of as a type of search problem, where we need to find a set of words that fits the rules for filling a specific crossword grid. Unsurprisingly, many crossword compilers use software to help them find a suitable set of words.

One approach is described in an excellent recent article on Philippe Olivier's blog: Generating crossword grids using Constraint Programming. That article inspired us to try using a different method: Mixed Integer Linear Programming (MILP).

We're not the first to consider using MILP to compile crosswords. An article published by Wilson in 1989 reported attempts to compile small crosswords using MILP models, as discussed in our previous article Solver performance - 1989 vs 2024. Wilson's conclusion was very pessimistic, noting that "the prospects of using integer programming for any type of puzzle of realistic size and with a substantial lexicon remain bleak". The problems were insufficient computer memory and the time need to solve even a small crossword grid using a then state-of-the-art, million-dollar superminicomputer.

But a lot has changed in the 35 years since 1989. Computers are thousands of times faster, and their memory capacity has increased by a similar factor. In addition, optimization solver software is literally millions of times faster. Models that were difficult to solve in 1989 are now trivial. Solutions that were impossible to find in 1989 may now be within reach. Does that include compiling crosswords of realistic size?

In this series of articles, we discuss:

- Representing a word puzzle in mathematical terms, enabling us to formulate the crossword compilation problem as a MILP model.

- Techniques for fine-tuning the model-building process in Pyomo, to make a model smaller and faster, including omitting constraint terms, skipping constraints, and fixing variable values.

5 January 2024

In our previous article, we solved a mixed integer linear program (MILP) model with more than 8 million binary variables. Not long ago, a model of that size would have been unsolvable. Yet, once we had a computer with sufficient memory, the model was solved to optimality by an open source solver in 3 hours.

Of course, not all large models are solvable – even some small models are unsolvable, or at least hard to solve. Nonetheless, modern solvers, in association with modern computers, enable us to solve models that not-so-long-ago were beyond our capabilities.

In our next article, we look to compile crosswords using a MILP model. We're not the first to consider using a MILP model to compile crosswords. An article published in 1989 reported attempts to compile small crosswords using MILP models. The article's conclusion was very pessimistic, noting that:

...the prospects of using integer programming for any type of puzzle of realistic size and with a substantial lexicon remain bleak.

Wilson, 1989, Crossword compilation using integer programming

The problems Wilson encountered were insufficient computer memory and the time needed to solve a very small crossword grid, even though he was using a then state-of-the-art million dollar superminicomputer.

But a lot has changed in the 35 years since 1989. Computer hardware is many times faster, and memory capacity has increased enormously. In addition, optimization solver software has improved by orders of magnitude. Models that were difficult to solve in 1989 are now trivial. Solutions that were impossible to find in 1989 may now be within reach.

In this article, we estimate the magnitude of speed improvement for optimization solvers and computer hardware in the 35 years from 1989 to 2024. The results may be surprising.

13 December 2023

We conclude our article series looking at a common modelling issue: The solution is optimal, but not practical.

In a previous article of this series, we solved Model 3 to optimality with the full data set of 100 items. Given the large memory requirements of Model 3, a data set with 100 items is close to the maximum that we can solve on our modelling computer. But what if we need to solve a larger data set?

Model 4 provides one approach, using a heuristic random column generation technique that finds very good solutions, though they are not guaranteed to be globally optimal.

The variants of Model 5 all have even more variables and constraints than Model 3, so they don't help us solve larger data sets.

To find guaranteed, globally optimal solutions we need to use Model 3. That requires a computer with more memory – potentially a lot more memory. We could upgrade our modelling computer. If there's a need to often solve very large models, then that would likely be the best option. But if the need is less frequent, then a potentially cost-effective solution is to use a virtual machine in "the cloud".

In this article, we adopt the latter approach. We describe, in detail, setting up a Google Compute Engine, provisioned with 128 GB RAM, and using it to solve Model 3 to global optimality with a data set that is larger than we can solve on our local computer.

1 December 2023

We continue our article series looking at a common modelling issue: The solution is optimal, but not practical.

In the previous article of this series, we explicitly formulated either/or constraints by manually creating \(BigM\) constraints. That technique works, though it can be a bit tricky to get right.

An alternative approach, since we're using Pyomo, is to use the Generalized Disjunctive Programming (GDP) extension that is part of Pyomo. GDP can represent a variety of logical implications, like either/or, and automatically translate them into a form that a solver can work with.

In this article, we describe how the GDP extension can be used to represent either/or constraints in a simple linear program. Along the way, we compare \(BigM\) constraints that we create manually with \(BigM\) constraints created automatically by the GDP extension. Then we use the GDP extension to build either/or constraints in our paper coverage situation, as variant Model 5c.

21 November 2023

We continue our article series looking at a common modelling issue: The solution is optimal, but not practical.

In the first article of this series, we made the assertion that we can implicitly mimic rotation of the items by sorting the item dimensions so that the width is always the longer side. Therefore, we didn't need to explicitly represent the orientation of the items in our earlier models.

This insight may not be obvious, so in this article we explicitly model rotation of the items using either/or constraints. That is, we want to represent items that are in either portrait or landscape orientation, with the model choosing only one of the orientations for each product.

The problem is that, in a mathematical programming model, all constraints are always evaluated. So, we can't have a condition like:

If orientation is portrait

then use constraint for portrait

else use constraint for landscape

Instead, we need to create a pair of constraints that behave the way we want, while both are always evaluated. This is done using a binary variable, which acts as a switch to decide which constraint is "active", and some \(BigM\) parameters that change each constraint's right-hand-side in a very specific way.

Our objectives in this article are to:

- Illustrate how to formulate and implement either/or constraints in an integer programming model, using \(BigM\) constraints.

- See how our model behaves when explicitly including either/or constraints.

- Demonstrate that our dimension sorting assertion is correct.

11 November 2023

We continue our article series looking at a common modelling issue: The solution is optimal, but not practical.

In the previous article, we built Model 3 for our paper coverage problem – a linear model that enumerates all possible stock paper size combinations, then chooses the sizes that minimize trim waste. Model 3 finds globally optimal solutions for each case, so we've solved the original problem. But, from a modelling perspective, there's more we can learn from this situation.

An issue with Model 3 is that it is close to the maximum size we can solve, due to memory usage. This is somewhat unusual – normally, we're concerned about the time to solve a model, but for this model the concern is the amount of memory it needs. Specifically, the number of variables and the amount of memory used both increase with the cube of the number of items. For example, an instance with 100 items has more than 1,000,000 variables, and the HiGHS solver uses up to 7.5 GB of RAM. If we double the number of items, to 200, then the model would have more than 8,000,000 variables and require more RAM than our PC has (we tried).

So, if we can't use Model 3 with a larger data set, then what can we do? Model 2 found good, though mostly sub-optimal, solutions by considering only a 1% subset of the possible stock product combinations. In this article, we develop Model 4 which extends Model 2 by adding randomly generated stock product candidates. The idea is that the solver might be able to find better solutions using a larger subset of candidates, without needing to consider every possible candidate simultaneously.

Our approach for Model 4 is a type of random column generation technique. Strictly speaking, we're not doing standard column generation, which uses a sub-model to decide which columns to add. Instead, we randomly generate columns, solve the model, then repeat some number of times while noting the best solution found. This approach isn't guaranteed to find globally optimal solutions, but it should get close.

4 November 2023

We continue our article series looking at a common modelling issue: The solution is optimal, but not practical.

In the previous article, we built Model 2 for our paper coverage problem – a linear model that considered a subset of the possible stock paper size combinations. Model 2 found optimal solutions for each case in around 2 seconds, which is an enormous improvement compared with the performance of the non-linear Model 1. However, because we use only a subset of the possible combinations, Model 2's solutions are not globally optimal.

In this article, we extend Model 2 to consider all possible combinations of stock paper sizes. Consequently, Model 3 is quite large, with more than 1 million binary variables. If we can solve this model, then it should produce globally optimal solutions.

24 October 2023

We continue our article series looking at a common modelling issue: The solution is optimal, but not practical.

In the previous article, we identified a theoretically perfect solution that is impractical to implement due to its complexity. We then developed a non-linear model of the situation, limited to just a few stock product sizes, but that model was difficult to solve.

In this article, we formulate and implement a linear model, with the hope that it will be easier to solve. We focus on considering only a partial set of potential solutions, as a step towards a full linear model.

10 October 2023

We address a common modelling issue: The solution is optimal, but not practical.

In our situation, the stated objective is to minimize trim waste in our paper manufacturing process. This is a type of coverage optimization problem. There is a theoretically perfect solution, with zero waste, but it is impractical to implement due to its complexity.

So, in reality, we have two intertwined objectives that we need to satisfy:

- Quantitative: Minimize trim waste in our manufacturing process.

- Qualitative: Make the solution simple and practical.

We can model the quantitative objective, but the qualitative objective has at least equal importance. Having an optimal, but impractical, solution is common in real-world optimization problems. The conflict between perfect and practical makes our situation both challenging and interesting.

In this series of articles, we use a variety of techniques to create several models. Along the way, we address the trade-off between our quantitative and qualitative objectives, enabling the decision maker to make an informed decision to implement an efficient and practical solution.

8 September 2023

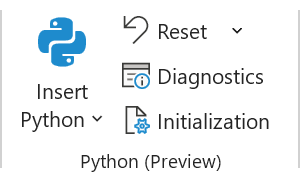

Microsoft recently announced a new Excel feature, Python embedded within Excel. This is great news, as it combines our two favourite tools: Excel and Python. Since our focus is on optimization modelling, we immediately wondered if it is possible to use a Python library to solve optimization models directly within Excel. Spoiler alert, it is!

In this article, we experiment with building and solving a linear program in Excel using the Python SciPy library. Specifically, we replicate the Production Mix model that we previously solved using SciPy as part of our Rosetta Stone series of articles that looked at various Python optimization tools.